Introduction

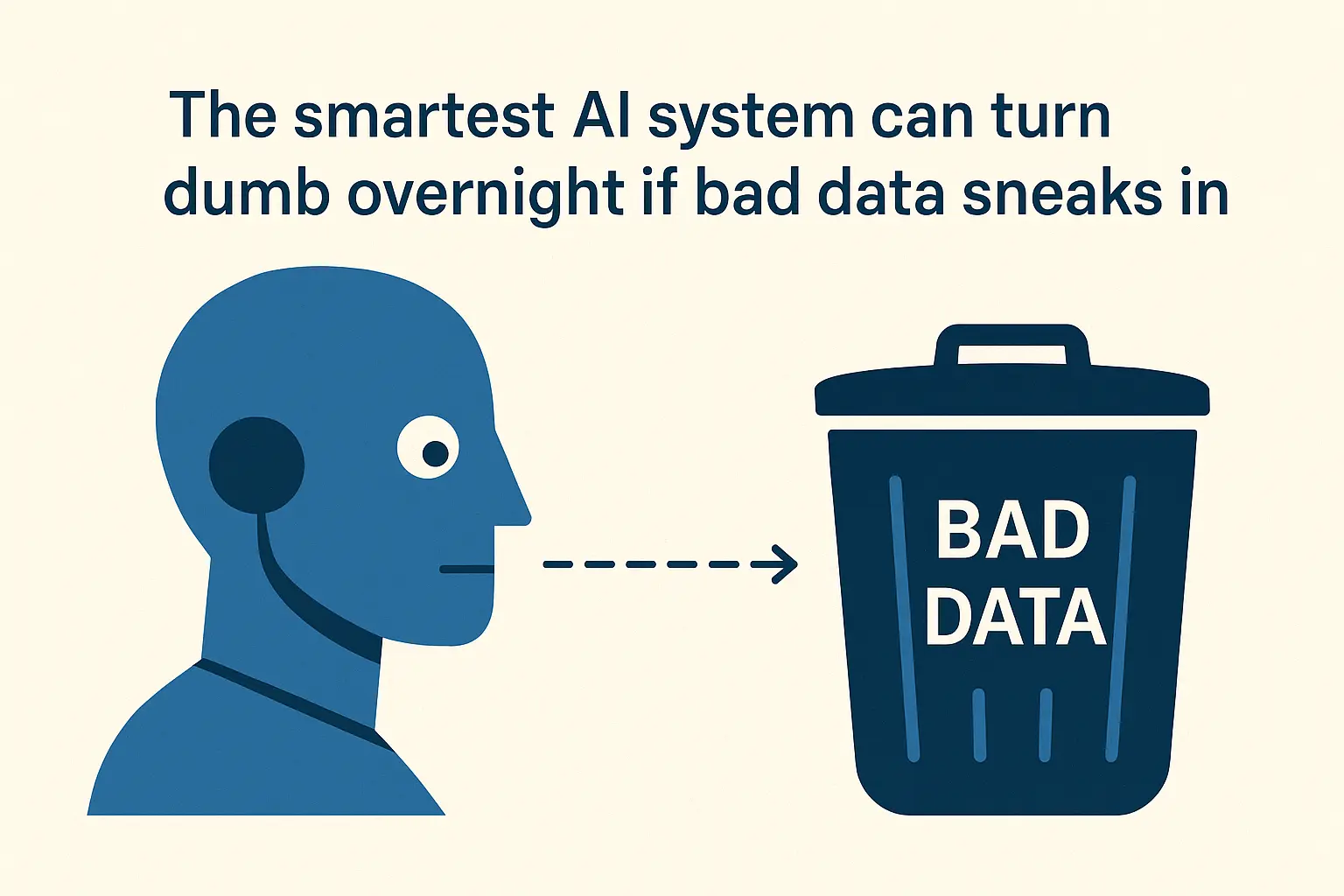

We often get caught up in the excitement of powerful AI models and the buzzwords that make AI sound futuristic: GPTs, transformers, embeddings. But beneath all that brilliance lies a simple truth: even the smartest AI system can turn unreliable overnight if poor-quality data slips in. This is the Garbage-In, Garbage-Out (GIGO) problem, and it is one of the biggest reasons enterprise AI projects fail silently.

The issue usually isn’t the model; it’s the data feeding it. The solution, however, isn’t about adding more tools or frameworks. It’s about enforcing discipline through data contracts and guardrails that ensure only clean, validated, and reliable data reaches your AI systems.

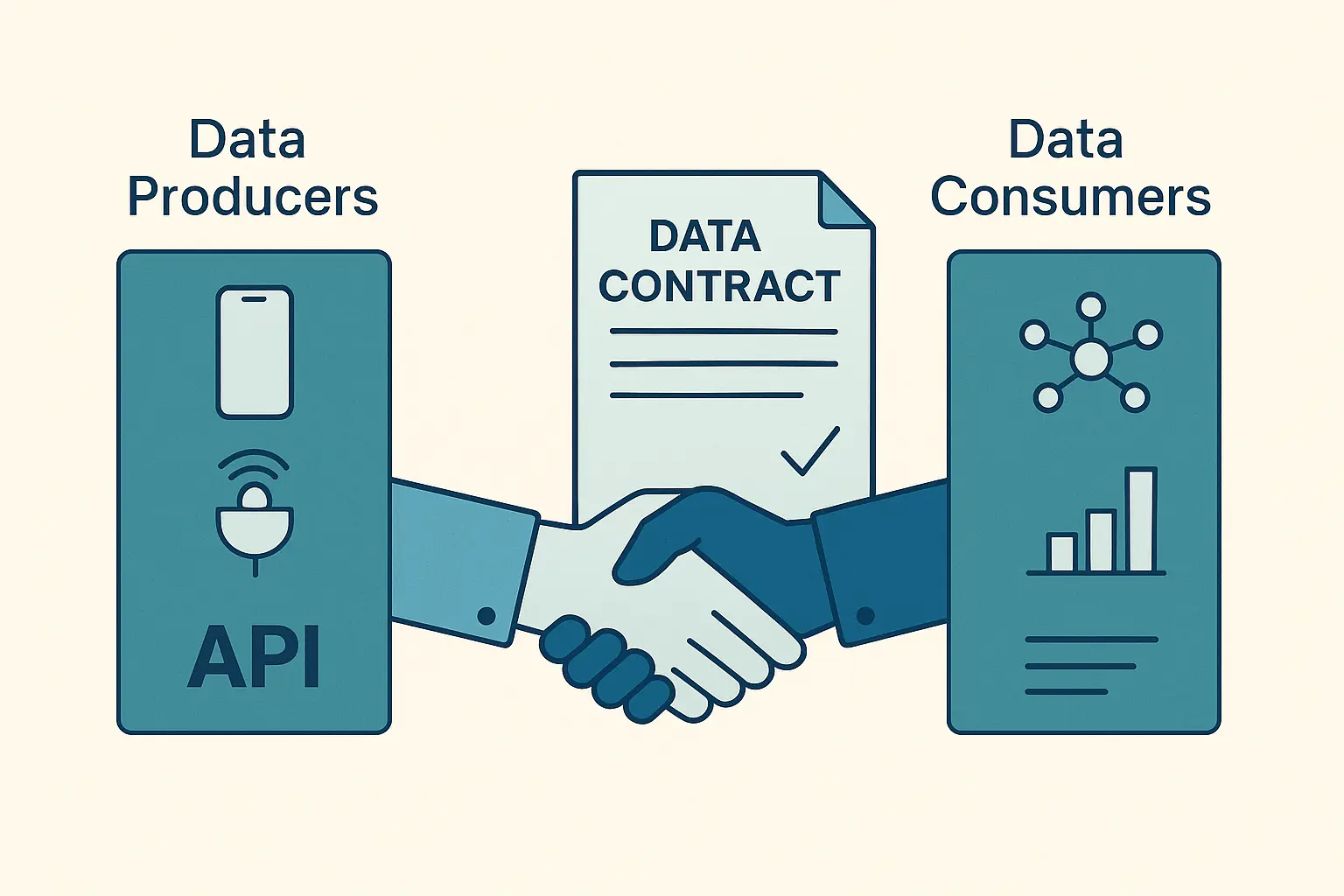

What Are Data Contracts (and Why Every AI System Needs Them)

A data contract is essentially a handshake between your data producers (apps, sensors, or APIs) and your data consumers (AI models or analytics systems). It’s a mutual agreement that defines the data format, quality standards, and rules both sides will follow, ensuring consistency and trust. In simple terms, it’s a promise that says, “Here’s the data structure I’ll deliver, and if I ever break it, the system will know.” Without such contracts, even a small change, like a missing timestamp or a renamed field, can silently corrupt downstream training data and degrade model accuracy.
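To make that promise concrete, here is a minimal sketch in Python, assuming a contract is simply a named, versioned mapping of field names to expected type names. `DataContract`, `breaking_changes`, and the shipment fields are hypothetical names for illustration, not part of any specific tool. The check flags fields that were removed or retyped, which is how a renamed field such as `delivered_at` surfaces as a contract break.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract: a versioned mapping of field name -> expected type name."""
    name: str
    version: str
    fields: dict[str, str] = field(default_factory=dict)


def breaking_changes(contract: DataContract, producer_schema: dict[str, str]) -> list[str]:
    """Return human-readable violations of the contract by a producer's proposed schema."""
    problems = []
    for field_name, expected_type in contract.fields.items():
        if field_name not in producer_schema:
            problems.append(f"missing field: {field_name}")
        elif producer_schema[field_name] != expected_type:
            problems.append(
                f"type changed for {field_name}: "
                f"{expected_type} -> {producer_schema[field_name]}"
            )
    return problems


shipments_v1 = DataContract(
    name="shipments",
    version="1.0",
    fields={"shipment_id": "str", "status": "str", "delivered_at": "datetime"},
)

# A producer renames delivered_at to delivery_ts; the contract check catches it.
proposed = {"shipment_id": "str", "status": "str", "delivery_ts": "datetime"}
print(breaking_changes(shipments_v1, proposed))  # -> ['missing field: delivered_at']
```

Extra fields added by the producer are deliberately not treated as breaking in this sketch, since consumers that ignore unknown fields keep working; a stricter contract could reject them as well.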

Schema Validation (Catching Errors Before They Spread)

Once data enters your pipeline, schema validation steps in like airport security.

It checks every record:

- Are the data types correct?

- Are mandatory fields filled?

- Do values make logical sense?

For instance, if a shipment’s status is “Delivered” but its delivery timestamp is null, that record should be flagged. Tools like Great Expectations or Pydantic can automate these checks at scale.

Why does it matter? Because schema mistakes are sneaky: they don’t crash your system; they quietly mis-train your models.
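The three checks listed above (types, mandatory fields, logical consistency) can be sketched with the standard library alone. The schema, field names, and `validate` function below are hypothetical; Great Expectations or Pydantic would express the same rules declaratively, but this is not their API, only an illustration of the logic they automate.

```python
from datetime import datetime
from typing import Any

# Hypothetical schema for a shipment record: field -> (expected type, required?)
SCHEMA: dict[str, tuple[type, bool]] = {
    "shipment_id": (str, True),
    "status": (str, True),
    "delivered_at": (datetime, False),
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return a list of schema violations for one record (empty if it passes)."""
    errors = []
    for name, (expected, required) in SCHEMA.items():
        value = record.get(name)
        if value is None:
            if required:
                errors.append(f"{name}: mandatory field is missing")
            continue
        if not isinstance(value, expected):
            errors.append(f"{name}: expected {expected.__name__}, got {type(value).__name__}")
    # Cross-field rule: a delivered shipment must carry a delivery timestamp.
    if record.get("status") == "Delivered" and record.get("delivered_at") is None:
        errors.append("delivered_at: required when status is 'Delivered'")
    return errors


good = {"shipment_id": "S-1", "status": "Delivered", "delivered_at": datetime(2024, 5, 1, 9, 30)}
bad = {"shipment_id": 42, "status": "Delivered", "delivered_at": None}
print(validate(good))  # -> []
print(validate(bad))
# -> ['shipment_id: expected str, got int', "delivered_at: required when status is 'Delivered'"]
```

Returning every violation rather than stopping at the first one makes rejected records easier to diagnose when they land in an error queue.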

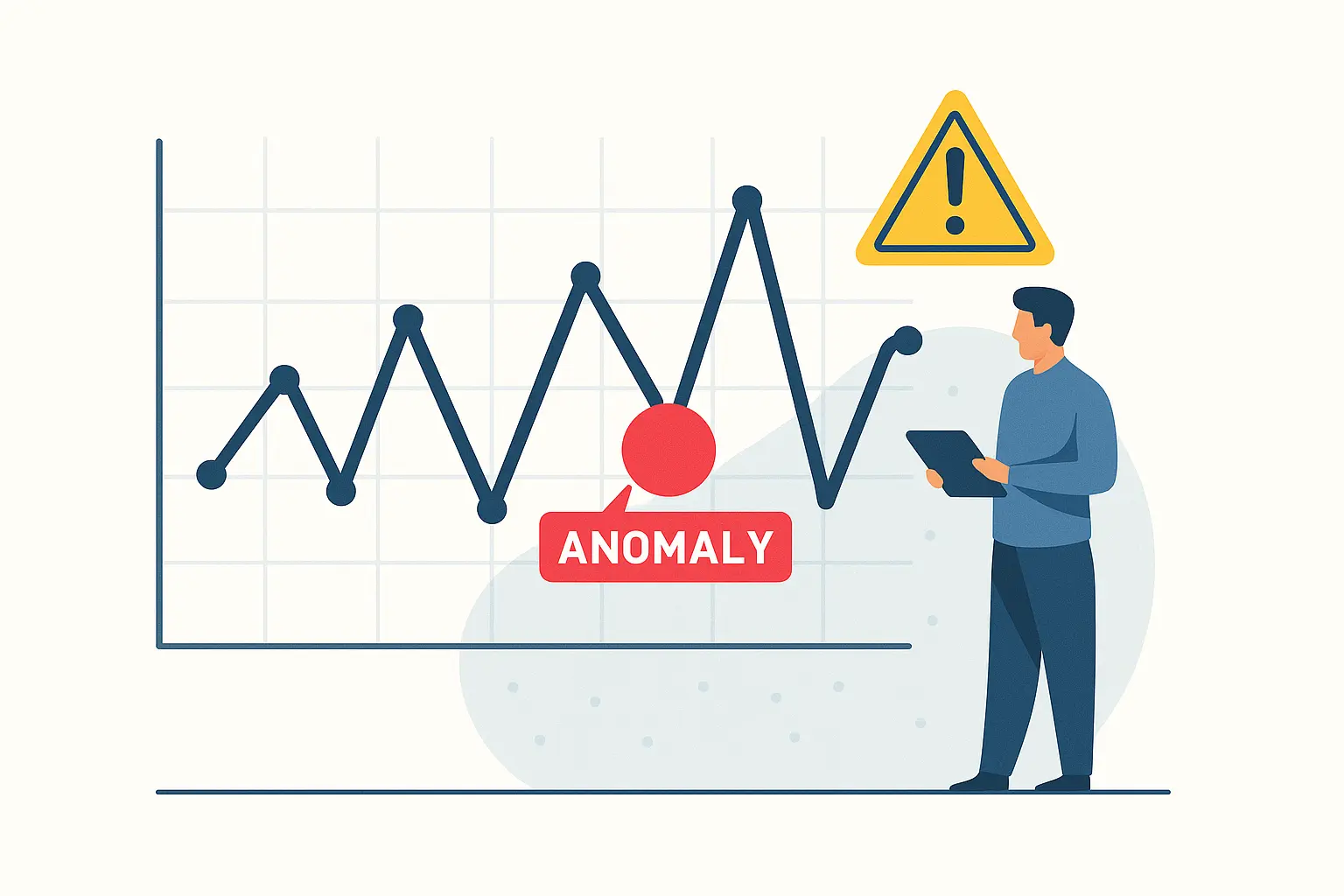

Anomaly Detection (Because Clean Data Can Still Be Wrong)

Even when your data schema is flawless, your numbers can still mislead you. Imagine a sudden 90% drop in warehouse shipments. Is that a real business issue or just a faulty sensor? This is where anomaly detection plays a crucial role. Through statistical analysis or ML-based detectors, it identifies irregularities such as outliers, missing ranges, duplicate entries, or sudden spikes and drops that could silently corrupt your data.
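The article doesn’t prescribe a particular detector, so here is one common statistical option as a sketch: the modified z-score, which uses the median and the median absolute deviation (MAD) so that a single extreme value can’t inflate the baseline the way it inflates a mean and standard deviation. The 3.5 cutoff is a conventional default rather than a tuned value, and `robust_outliers`, `duplicate_keys`, and the shipment counts are illustrative assumptions.

```python
from statistics import median


def robust_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices whose modified z-score (median/MAD based) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half the values equal the median; flag anything that differs.
        return [i for i, v in enumerate(values) if v != med]
    # 0.6745 scales MAD so the score is comparable to a standard z-score
    # for normally distributed data.
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > threshold
    ]


def duplicate_keys(records: list[dict], key: str) -> list:
    """Return key values that appear more than once (each repeat is reported)."""
    seen, duplicates = set(), []
    for record in records:
        value = record[key]
        if value in seen:
            duplicates.append(value)
        else:
            seen.add(value)
    return duplicates


# Daily shipment counts; the last day shows a ~90% drop.
daily = [1020, 980, 1005, 995, 1010, 990, 100]
print(robust_outliers(daily))  # -> [6]

rows = [{"shipment_id": "S-1"}, {"shipment_id": "S-2"}, {"shipment_id": "S-1"}]
print(duplicate_keys(rows, "shipment_id"))  # -> ['S-1']
```

The detector only flags the drop; deciding whether it reflects a real business issue or a faulty sensor still takes a human or an upstream check.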

These anomalies don’t just distort analytics; they contaminate your AI training sets, leading to poor model performance. That’s why detecting and isolating anomalies before retraining is essential. As we often say, AI doesn’t fail loudly; it drifts quietly, and anomaly detection is what keeps that drift in check.
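Isolating anomalies before retraining can be as simple as routing every record through a set of checks and setting failures aside together with their reasons. The sketch below assumes each check is a plain function that returns a reason string or `None`; `split_for_training`, `SplitResult`, and the example checks are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

Record = dict[str, Any]
# A check inspects one record and returns a reason if it looks anomalous, else None.
Check = Callable[[Record], Optional[str]]


@dataclass
class SplitResult:
    clean: list[Record] = field(default_factory=list)
    quarantined: list[tuple[Record, list[str]]] = field(default_factory=list)


def split_for_training(batch: list[Record], checks: list[Check]) -> SplitResult:
    """Route each record to the clean set or to quarantine with its failure reasons."""
    result = SplitResult()
    for record in batch:
        reasons = []
        for check in checks:
            reason = check(record)
            if reason is not None:
                reasons.append(reason)
        if reasons:
            result.quarantined.append((record, reasons))
        else:
            result.clean.append(record)
    return result


def negative_weight(record: Record) -> Optional[str]:
    return "negative weight" if record.get("weight_kg", 0) < 0 else None


def missing_status(record: Record) -> Optional[str]:
    return "missing status" if not record.get("status") else None


batch = [
    {"shipment_id": "S-1", "status": "Delivered", "weight_kg": 12.0},
    {"shipment_id": "S-2", "status": "", "weight_kg": -3.0},
]
result = split_for_training(batch, [negative_weight, missing_status])
print([r["shipment_id"] for r in result.clean])  # -> ['S-1']
print(result.quarantined[0][1])  # -> ['negative weight', 'missing status']
```

Quarantined records are kept rather than deleted so that someone can later confirm whether an anomaly was noise or a genuine event worth training on.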

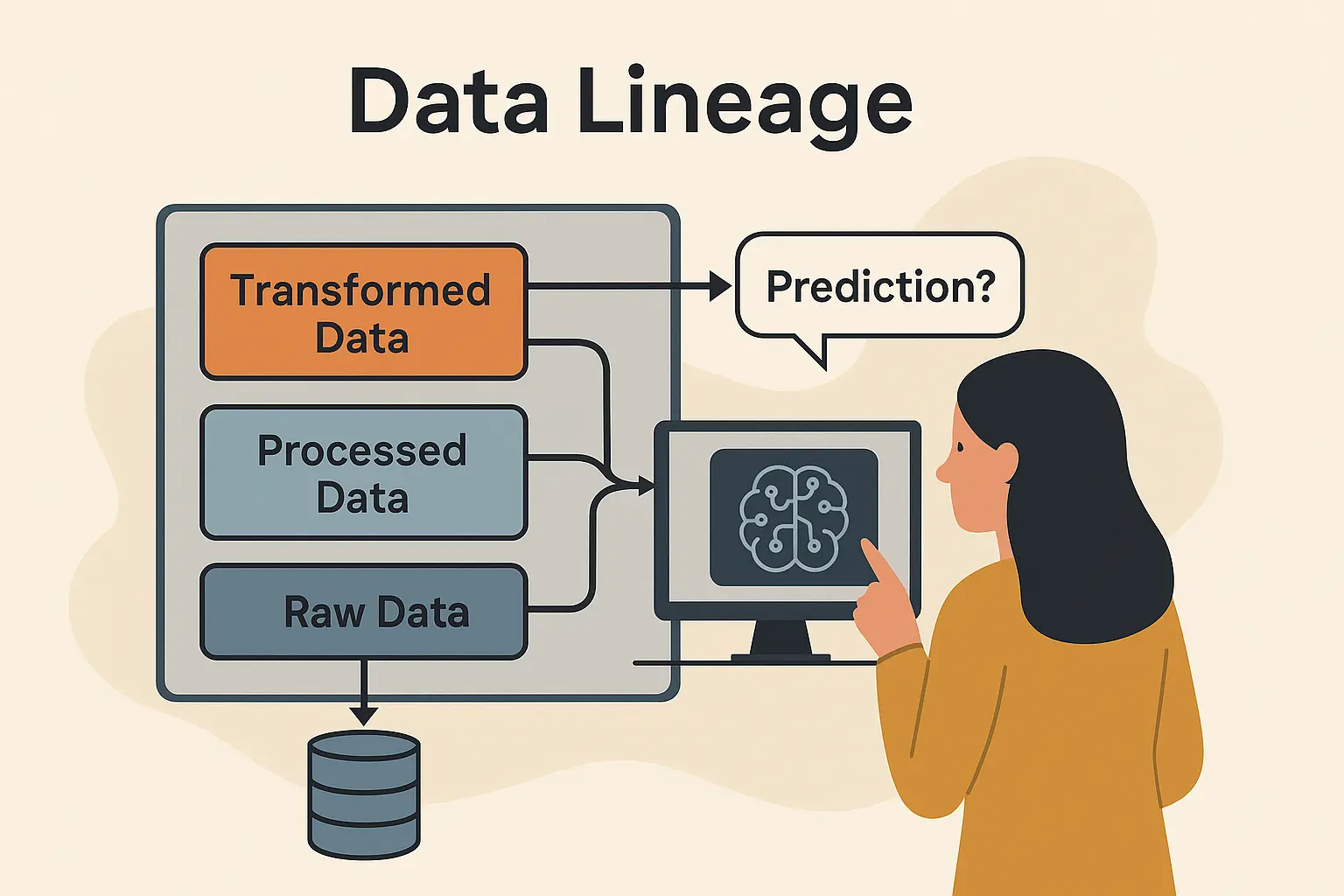

Data Lineage (Knowing Where Your Data Came From)

In enterprise AI, traceability equals trust.

When a model gives a weird output, you should be able to ask:

“Which data led to this prediction?”

That’s where data lineage comes in. It’s the ability to trace every piece of data: where it originated, how it was transformed, and which model used it.
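In production, dedicated lineage tooling captures this metadata automatically; the toy model below only illustrates the underlying idea, assuming lineage is a graph in which each node records its parents and the transformation that produced it. `Node` and `trace` are hypothetical names, and the dataset and model names are made up.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Node:
    """One hop in a hypothetical lineage graph."""
    name: str
    kind: str                # e.g. "source", "dataset", "model", "prediction"
    transform: str = ""      # how this node was produced from its parents
    parents: list["Node"] = field(default_factory=list)


def trace(node: Node) -> list[Node]:
    """Return every upstream ancestor of node, nearest first (breadth-first)."""
    ancestors, seen = [], set()
    queue = deque(node.parents)
    while queue:
        current = queue.popleft()
        if current.name in seen:  # shared upstream nodes are reported once
            continue
        seen.add(current.name)
        ancestors.append(current)
        queue.extend(current.parents)
    return ancestors


sensors = Node("warehouse_sensors", "source")
erp = Node("erp_api", "source")
raw = Node("shipments_raw", "dataset", "hourly ingestion", [sensors, erp])
clean = Node("shipments_clean", "dataset", "schema validation + anomaly quarantine", [raw])
model = Node("delay_model_v3", "model", "trained on shipments_clean", [clean])
prediction = Node("prediction_8812", "prediction", "scored by delay_model_v3", [model])

# Answer "Which data led to this prediction?" by walking upstream.
for hop in trace(prediction):
    print(f"{hop.kind}:{hop.name}  <- {hop.transform or 'origin'}")
```

Walking upstream from a single prediction turns the question above into one call, which is exactly the kind of query a lineage system is meant to make cheap.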

We use tools like DataHub and OpenLineage to map every hop your data takes from ingestion to inference, so if something breaks, you know exactly where to look. Lineage is also critical for compliance. In healthcare, finance, and logistics, accurate predictions aren’t enough; you need auditable AI.

Guardrails (Keeping Humans in the Loop)

Even with the cleanest data pipelines, surprises happen. New formats, unexpected sources, or unplanned schema changes can throw off your flow.

Guardrails are the human safety nets built into automation:

- Role-based access control

- Approval workflows for schema changes

- PII scrubbing and compliance filters

- Drift monitoring dashboards
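As an illustration of the first two guardrails, role-based access control and approval workflows for schema changes, here is a minimal sketch assuming a change may only be deployed after approval by someone who holds a hypothetical `data_steward` role and is not the requester. The role table, `SchemaChange`, and the approval rule are all assumptions; a real deployment would pull roles from its identity provider.

```python
from dataclasses import dataclass, field

# Hypothetical role assignments; a real system would query its identity provider.
ROLES: dict[str, set[str]] = {
    "alice": {"data_engineer"},
    "bob": {"data_steward"},
    "carol": {"data_steward", "data_engineer"},
}
APPROVER_ROLE = "data_steward"


@dataclass
class SchemaChange:
    dataset: str
    description: str
    requested_by: str
    required_approvals: int = 1
    approved_by: set[str] = field(default_factory=set)

    def approve(self, user: str) -> None:
        """Record an approval, enforcing role-based access and separation of duties."""
        if APPROVER_ROLE not in ROLES.get(user, set()):
            raise PermissionError(f"{user} lacks the {APPROVER_ROLE} role")
        if user == self.requested_by:
            raise PermissionError("requesters cannot approve their own change")
        self.approved_by.add(user)

    @property
    def can_deploy(self) -> bool:
        return len(self.approved_by) >= self.required_approvals


change = SchemaChange("shipments", "rename delivered_at to delivery_ts", requested_by="alice")
try:
    change.approve("alice")
except PermissionError as err:
    print(err)  # -> alice lacks the data_steward role
print(change.can_deploy)  # -> False
change.approve("bob")
print(change.can_deploy)  # -> True
```

Separating the requester from the approver is the design choice that turns a schema change from a silent edit into a reviewed event.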

In one of our healthcare AI deployments, these guardrails prevented sensitive patient information from entering non-compliant model logs. The key idea: guardrails don’t slow innovation; they make it sustainable.
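The article doesn’t detail how that deployment kept patient data out of logs. One common pattern, sketched here with Python’s standard `logging` module, is a filter attached to the log handler that redacts PII before any record is written. The two regular expressions (email addresses and US Social Security numbers) and the logger name are illustrative assumptions only; real PII scrubbing needs much broader coverage.

```python
import io
import logging
import re

# Illustrative patterns only: real PII detection needs far broader coverage.
PII_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def redact(text: str) -> str:
    """Replace every PII match with its placeholder token."""
    for pattern, token in PII_PATTERNS:
        text = pattern.sub(token, text)
    return text


class PIIScrubFilter(logging.Filter):
    """Rewrites each record's message with PII redacted before the handler emits it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = ()  # message is already formatted; don't re-apply args
        return True       # never drop the record, only sanitize it


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(PIIScrubFilter())
logger = logging.getLogger("model_inference")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # keep this demo's output out of the root logger

logger.info("scored patient %s, contact %s", "123-45-6789", "jane.doe@example.com")
print(stream.getvalue().strip())  # -> scored patient [SSN], contact [EMAIL]
```

Attaching the filter to the handler rather than the logger matters: logger-level filters are not applied to records propagated from child loggers, whereas a handler filter sees every record that handler emits.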

Conclusion

You can’t scale intelligence on unstable data. Every successful AI story begins not with a complex model or a breakthrough algorithm, but with something far more fundamental: clean, consistent, contract-verified data. It’s the quiet hero that powers every intelligent system behind the scenes.

Before you chase the next big model upgrade or experiment with the latest architecture, pause and ask yourself:

“Can my data be trusted every single time?”

If the answer isn’t a confident yes, that’s your signal to focus on data guardrails. These systems ensure that every dataset feeding your AI is validated, traceable, and protected against silent corruption. That’s exactly where Nyx Wolves can help: building the invisible backbone of trust that makes AI truly scalable, dependable, and enterprise-ready.