Protect Your Data: Why Self Hosted AI Chatbots Are the Safer Choice for Businesses

Self hosted AI chatbots keep customer and business data in house, reducing privacy risk and compliance exposure while delivering secure, high quality AI support.

Self hosted AI chatbots and why hosting model affects data security

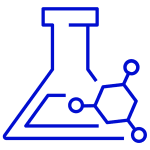

A self hosted AI chatbot is an AI assistant deployed within infrastructure controlled by the business. This is commonly implemented as an on premises chatbot, a private cloud chatbot inside a virtual private cloud, or a hybrid chatbot architecture that keeps sensitive data within a secure network boundary.

The core security advantage is control. The organization controls the full data flow: where data is processed, where data is stored, how long data is retained, and who can access it. In contrast, a SaaS chatbot platform introduces additional parties, additional processing layers, and additional storage locations that may not align with enterprise security standards or data residency requirements.

From a risk perspective, AI chatbot hosting is not merely a convenience decision. It is a privacy and security architecture decision.

AI chatbot data privacy: why chatbots increase data exposure

AI chatbots can appear simple on the surface, but a production chatbot is a multi step pipeline. A single user message can trigger document retrieval, model inference, tool calls, and multiple layers of logging and monitoring.

These systems commonly generate or store data in the following places.

- Conversation transcripts and chat history

- Prompt logs and model inputs

- Retrieved document snippets used in responses

- Vector embeddings created from internal documents

- Application logs and debug traces

- Monitoring and analytics dashboards

- Backups and replicated storage

Each location creates a potential exposure point. The privacy risk is not limited to model behavior. It includes the entire lifecycle of conversational data and the systems that store or process it. Self hosted AI chatbots reduce exposure by keeping these artifacts inside the organization’s controlled environment and by enabling stricter policies around retention and access.

Data residency and compliance: why enterprises self host AI chatbots

Many organizations must comply with data residency policies, client confidentiality agreements, regulations such as GDPR and HIPAA, security standards and attestations such as ISO 27001 and SOC 2, and sector specific requirements. AI chatbot deployments can violate these obligations if data is processed or stored in regions outside approved boundaries.

With a self hosted AI chatbot, organizations can enforce residency controls at the infrastructure level:

- Compute and storage remain within an approved region

- Logs and backups follow the same residency constraints

- Network egress can be restricted to prevent cross border data transfers

- Audit evidence is easier to produce because the system remains within the enterprise control plane
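Residency controls like these are easiest to audit when they are checked mechanically rather than by inspection. The sketch below is a minimal, hypothetical pre-deployment check: it assumes each chatbot component (inference, vector database, logs, backups) is described by a region tag, and it flags anything outside an approved set. The region names, the `Component` type, and both helper functions are illustrative placeholders, not any cloud provider's API.

```python
from dataclasses import dataclass

# Hypothetical approved regions; placeholders, not real provider region IDs.
APPROVED_REGIONS = {"eu-central", "eu-west"}


@dataclass(frozen=True)
class Component:
    name: str    # e.g. "vector-db", "nightly-backup"
    kind: str    # "compute", "storage", "logs", or "backup"
    region: str  # where this component runs or stores data


def residency_violations(components, approved=APPROVED_REGIONS):
    """Return every component deployed outside the approved regions."""
    return [c for c in components if c.region not in approved]


def egress_allowed(destination_region, approved=APPROVED_REGIONS):
    """Deny outbound transfers to any region outside the approved set."""
    return destination_region in approved


# Example deployment: logs and backups are checked like compute and storage.
deployment = [
    Component("llm-inference", "compute", "eu-central"),
    Component("vector-db", "storage", "eu-central"),
    Component("app-logs", "logs", "eu-west"),
    Component("nightly-backup", "backup", "us-east"),
]

for c in residency_violations(deployment):
    print(f"VIOLATION: {c.name} ({c.kind}) is in {c.region}")
```

Treating backups and logs as first-class components is the point of the design: they are the artifacts most often replicated to a default region without anyone noticing.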

For regulated industries, the ability to guarantee data location is a material security advantage. For enterprise procurement, it can be the difference between approval and rejection.

AI chatbot access control: preventing internal data leakage

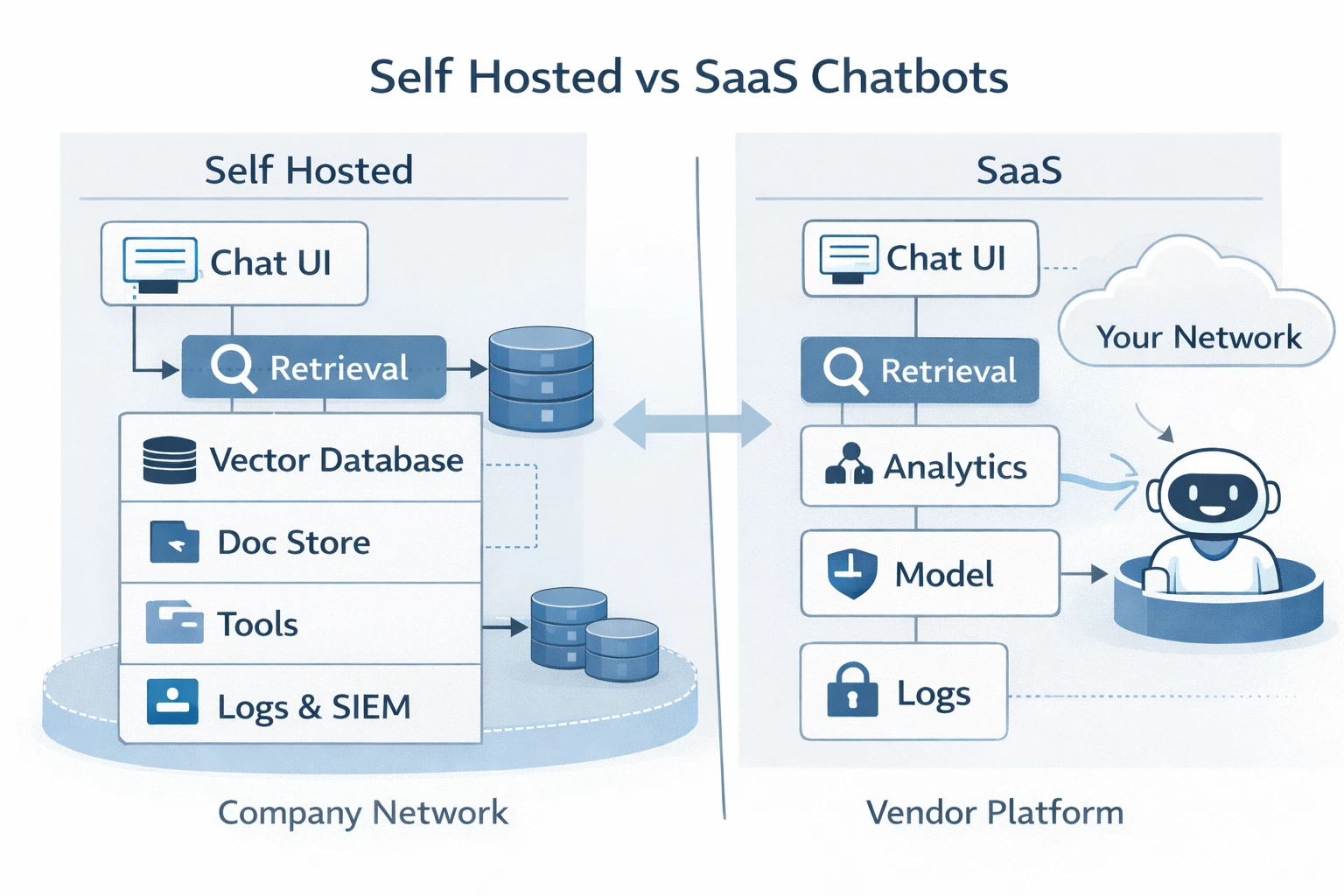

A common enterprise chatbot risk is improper authorization, which often results in internal data exposure rather than an external breach. The typical failure is permission blind retrieval: the chatbot pulls content from restricted documents and includes it in responses to users who are not authorized to view it.

This is mitigated with permission aware retrieval and role based access control, including SSO integration, retrieval time document permissions, segmented knowledge bases by role or department, scoped tool access, and auditable access logs. The objective is simple: the chatbot must never receive content the user is not allowed to access.
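The rule that the chatbot must never receive unauthorized content can be enforced in application code between vector search and prompt assembly. The following is a minimal sketch, assuming each indexed chunk carries the set of groups allowed to read its source document and that the user's groups come from the SSO identity provider; `Chunk`, `User`, and `permission_aware_retrieve` are hypothetical names, and the vector search that produces the ranked candidates is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to read the source document


@dataclass(frozen=True)
class User:
    user_id: str
    groups: frozenset  # assumed to be resolved from the SSO identity


def authorized(user, chunk):
    """A user may see a chunk if they share at least one permitted group."""
    return bool(user.groups & chunk.allowed_groups)


def permission_aware_retrieve(user, candidates, k=3):
    """Filter unauthorized chunks before taking the top k.

    `candidates` is assumed to be sorted by relevance by the vector search
    step. Filtering before the cut-off means restricted chunks never reach
    the prompt and do not crowd out permitted results.
    """
    permitted = [c for c in candidates if authorized(user, c)]
    return permitted[:k]


hr_doc = Chunk("d1", "salary bands", frozenset({"hr"}))
policy = Chunk("d2", "holiday policy", frozenset({"all-staff", "hr"}))
alice = User("alice", frozenset({"all-staff"}))
print([c.doc_id for c in permission_aware_retrieve(alice, [hr_doc, policy])])
```

Because the check runs before the model sees any text, it does not depend on the model choosing to withhold restricted content.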

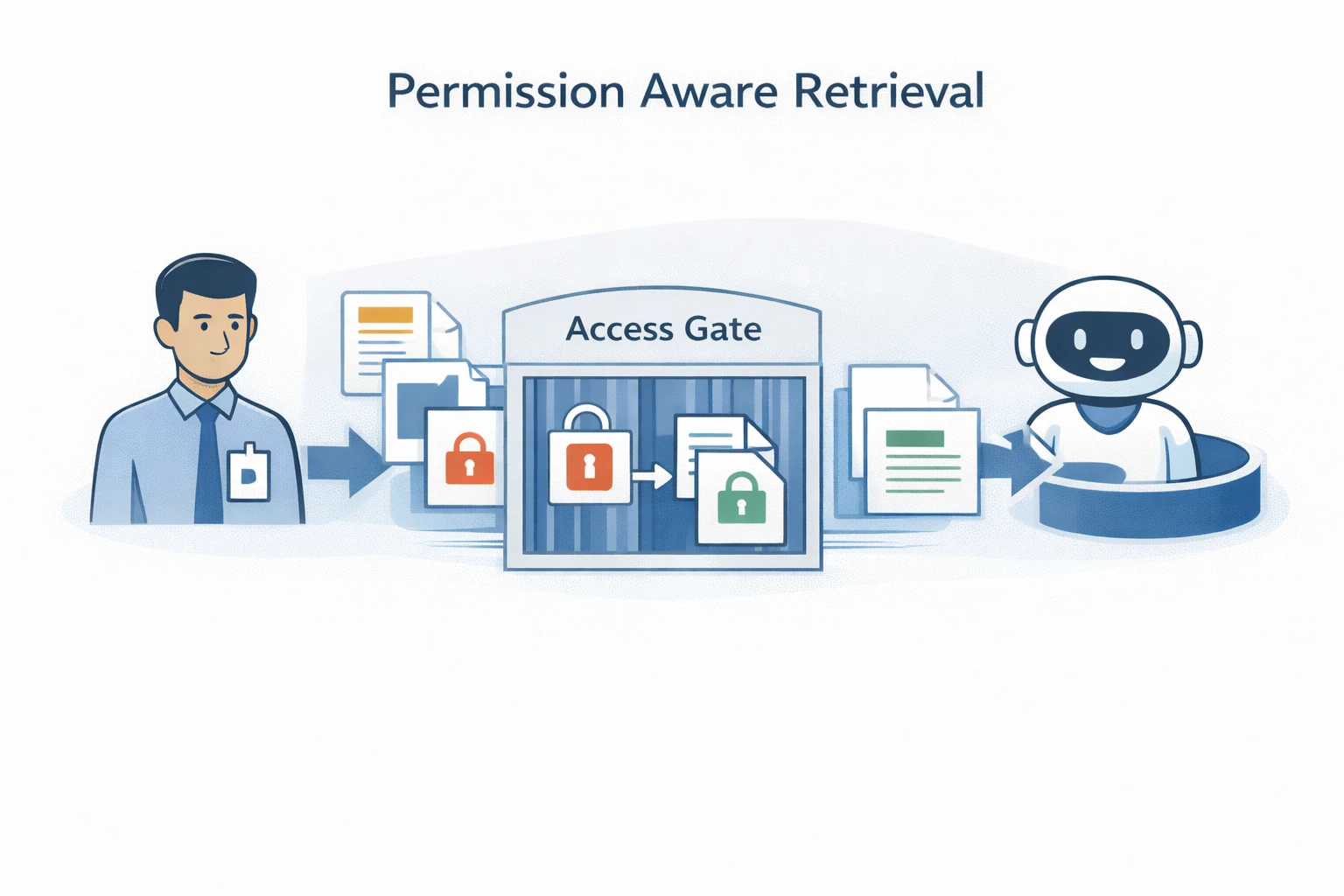

Data retention and logging: controlling chatbot transcripts and prompts

Chatbot transcripts and prompts often contain customer identifiers, employee data, operational details, and proprietary content, making uncontrolled retention a significant risk. Vendor hosted platforms may store prompts and responses for analytics or support, but enterprise governance typically requires tighter retention and access controls.

Self hosting enables stricter policies such as defined retention windows, metadata only logging, PII redaction, encryption with enterprise key management, restricted transcript access, and audit ready logging. For secure AI chatbot deployment, retention and logging must be designed intentionally, not added later.
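Three of these policies (metadata only logging, PII redaction, and retention windows) can be sketched in a few functions. This is an illustrative example under stated assumptions: the two regular expressions catch only simple email and phone formats, the 30 day window is a hypothetical value, and the in-memory list stands in for a real transcript store. Encryption with enterprise key management is not shown.

```python
import re
from datetime import datetime, timedelta, timezone

# Illustrative patterns only; production PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")

RETENTION = timedelta(days=30)  # hypothetical retention window


def redact(text):
    """Replace simple email addresses and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def metadata_log_entry(conversation_id, message, now):
    """Metadata only logging: record that a message occurred, not its content."""
    return {
        "conversation_id": conversation_id,
        "timestamp": now.isoformat(),
        "chars": len(message),
    }


def store_transcript(store, conversation_id, message, now):
    """Persist only redacted text, stamped so retention can be enforced."""
    store.append({"conversation_id": conversation_id,
                  "created": now,
                  "text": redact(message)})


def purge_expired(store, now, retention=RETENTION):
    """Return only the records still inside the retention window."""
    return [r for r in store if now - r["created"] < retention]
```

For example, `redact("mail bob@example.com now")` yields `"mail [EMAIL] now"`, and a purge job run daily with `purge_expired` drops any transcript older than the window.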

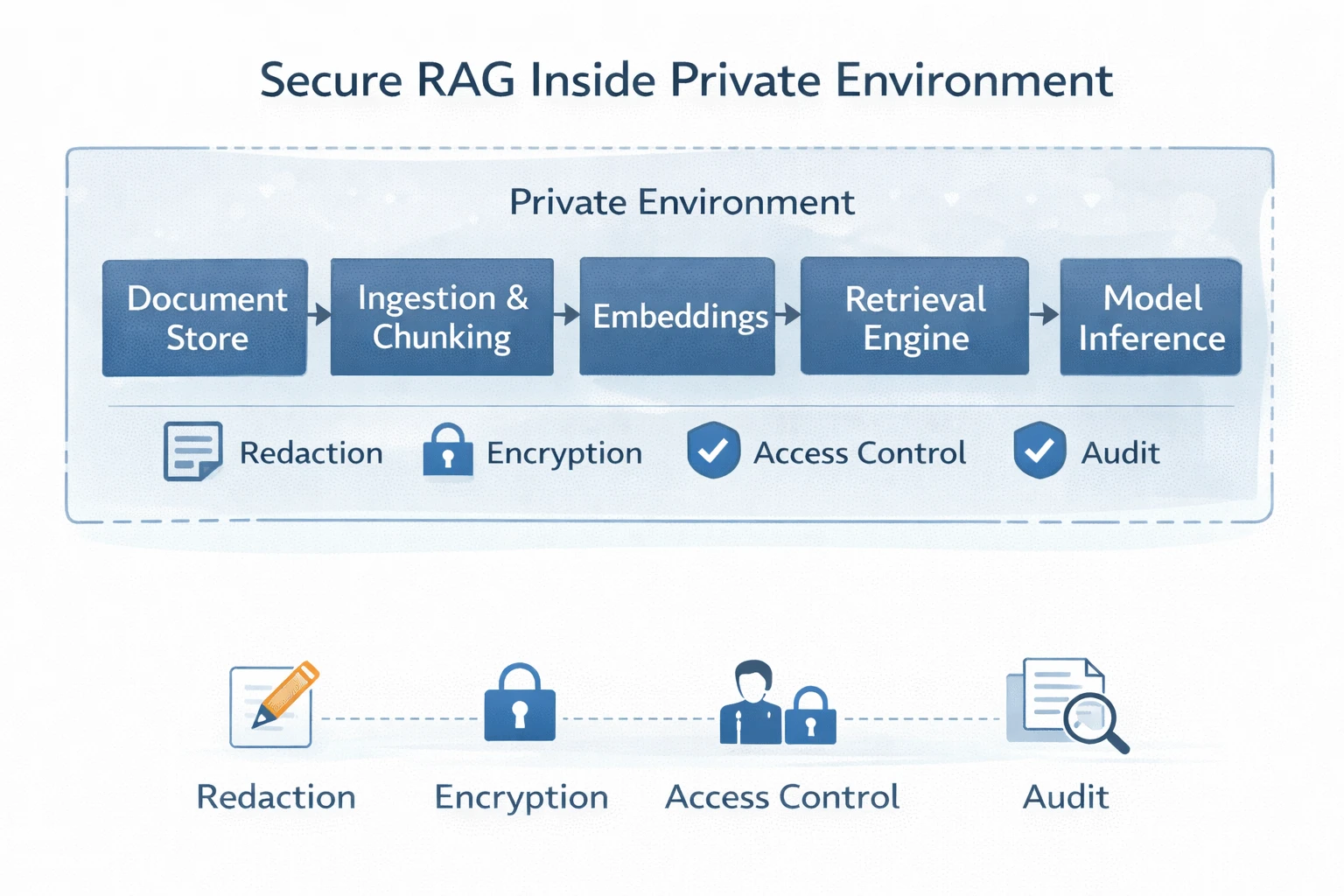

Secure RAG architecture: why self hosted retrieval improves security

Retrieval augmented generation improves chatbot accuracy by injecting relevant documents into the model context, but it increases security risk if retrieval is not tightly governed. Secure RAG requires control over document eligibility, user specific access, snippet and index storage, embedding protection, and output filtering to prevent sensitive data leakage.

Self hosted RAG is typically safer because the retrieval layer, vector database, and document store remain inside the organization’s security boundary, enabling permission aware retrieval and reducing external exposure. For many enterprises, secure RAG architecture is a primary factor in chatbot deployment decisions.
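Control over document eligibility can begin before anything is embedded. The sketch below assumes, hypothetically, that documents carry a sensitivity label and that each vector index has a maximum label it may hold; the label names, index names, and ceilings are illustrative. Documents above the ceiling are rejected at ingestion, and an unknown index fails closed.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical per-index ceiling: the most sensitive label each index may hold.
INDEX_CEILING = {
    "support-kb": Sensitivity.INTERNAL,
    "legal-kb": Sensitivity.CONFIDENTIAL,
}


def eligible_for_index(doc_label, index_name):
    """Allow embedding only if the label is at or below the index ceiling."""
    ceiling = INDEX_CEILING.get(index_name)
    if ceiling is None:
        return False  # unknown index: fail closed
    return doc_label <= ceiling


def plan_ingestion(docs, index_name):
    """Split (doc_id, label) pairs into accepted and rejected IDs before embedding."""
    accepted, rejected = [], []
    for doc_id, label in docs:
        if eligible_for_index(label, index_name):
            accepted.append(doc_id)
        else:
            rejected.append(doc_id)
    return accepted, rejected
```

Gating at ingestion complements retrieval time permission checks: content that never becomes an embedding cannot leak through the index, its snippets, or its backups.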

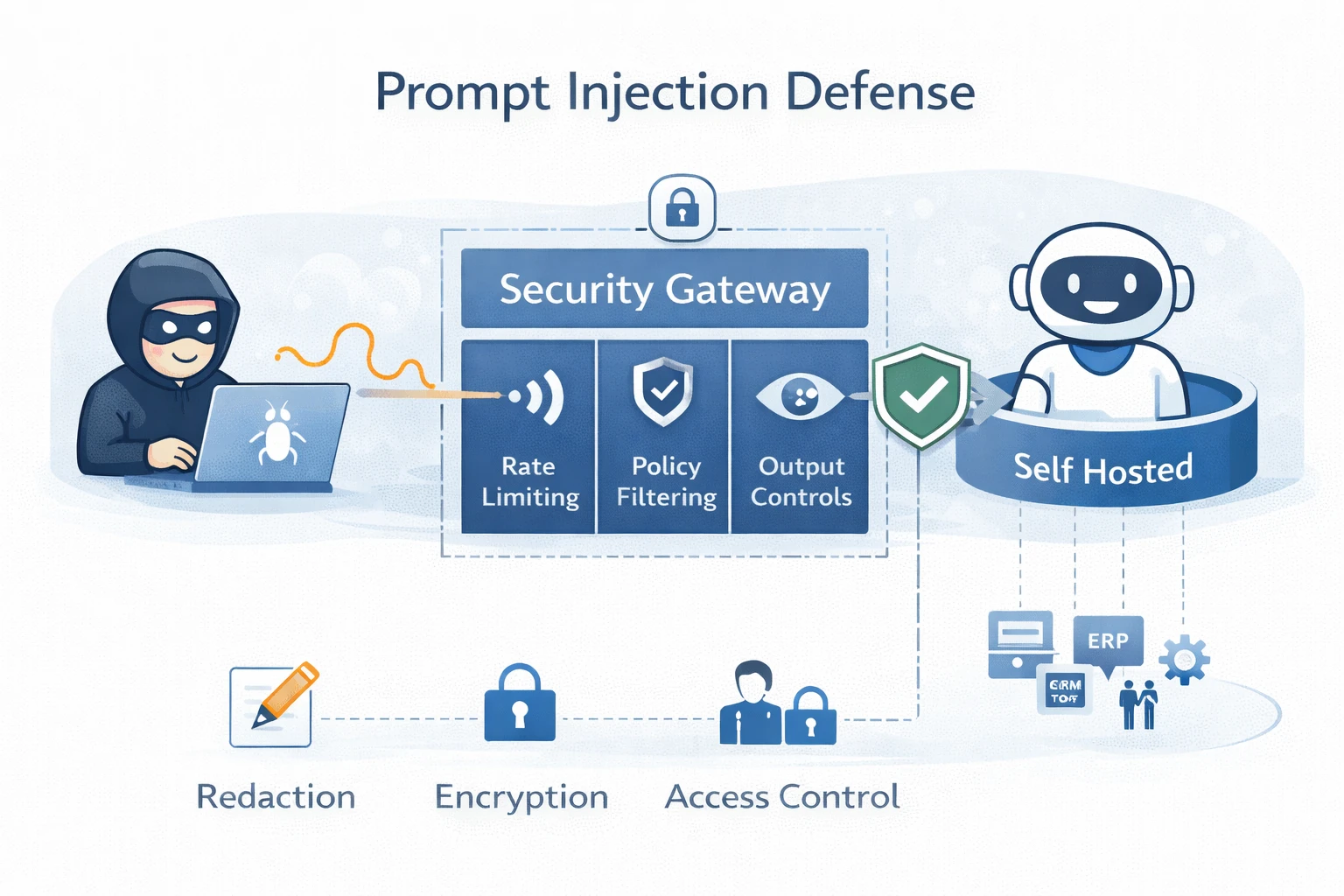

Prompt injection and tool security: protecting against data exfiltration

Prompt injection attempts to coerce a chatbot into revealing sensitive instructions, extracting confidential data, or abusing connected tools. Effective mitigation must be architectural, not dependent on the model alone.

Self hosted chatbots can enforce system level controls such as role and policy gated tool calls, permission and sensitivity constrained retrieval, secret and identifier output filtering, rate limiting with anomaly detection, and separation of high sensitivity knowledge sources. This defense in depth is easier to implement and validate when the full pipeline is under enterprise control.
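Two of these controls, policy gated tool calls and rate limiting, can be enforced in application code no matter what the model requests, which is what makes them resistant to prompt injection. This is a minimal sketch: the role names, tool names, and limits are hypothetical, the limiter is a simple in-memory sliding window, and anomaly detection is not shown.

```python
import time
from collections import defaultdict, deque

# Hypothetical policy: which roles may invoke which tools.
TOOL_POLICY = {
    "lookup_order": {"support_agent", "support_lead"},
    "issue_refund": {"support_lead"},
}


class ToolCallDenied(Exception):
    pass


class SlidingWindowLimiter:
    """Allow at most `limit` calls per user within `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.calls = defaultdict(deque)  # user_id -> timestamps of allowed calls

    def allow(self, user_id, now=None):
        now = time.monotonic() if now is None else now
        q = self.calls[user_id]
        while q and now - q[0] >= self.window:
            q.popleft()  # drop calls that fell outside the window
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True


def gate_tool_call(tool, role, user_id, limiter, now=None):
    """Check role policy, then rate limit, before executing a model-requested tool."""
    if role not in TOOL_POLICY.get(tool, set()):  # unknown tools are denied
        raise ToolCallDenied(f"role {role!r} may not call {tool!r}")
    if not limiter.allow(user_id, now):
        raise ToolCallDenied(f"rate limit exceeded for {user_id!r}")
    return True
```

The policy check runs first, so a denied call does not consume the user's rate budget, and an injected instruction to call an unlisted tool is rejected by default.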

Operational security and incident response: why self hosted improves readiness

Security includes detection, response, and recovery, not only prevention. Self hosted chatbots can integrate directly with enterprise security operations through SIEM logging, alerts for abnormal access or tool usage, rapid credential rotation and access revocation, isolation of compromised knowledge sources, and network level containment.
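SIEM integration usually starts with structured, machine-parseable security events. The sketch below uses Python's standard `logging` module to emit one JSON object per line, which a log forwarder could ship to a SIEM, and pairs it with a containment action. The event names, fields, and the in-memory `REVOKED_SESSIONS` set are hypothetical stand-ins for a real session store.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line for SIEM ingestion."""

    def format(self, record):
        event = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "event": record.getMessage(),
        }
        event.update(getattr(record, "fields", {}))  # structured context, if any
        return json.dumps(event)


logger = logging.getLogger("chatbot.security")
if not logger.handlers:  # avoid duplicate handlers on re-import
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
logger.setLevel(logging.INFO)

REVOKED_SESSIONS = set()  # hypothetical stand-in for a session store


def security_event(name, level=logging.INFO, **fields):
    """Emit a named security event with arbitrary structured fields."""
    logger.log(level, name, extra={"fields": fields})


def revoke_session(session_id, reason):
    """Containment step: invalidate a session and record why."""
    REVOKED_SESSIONS.add(session_id)
    security_event("session_revoked", logging.WARNING,
                   session_id=session_id, reason=reason)
```

A detection rule might call `revoke_session("s-42", "abnormal tool usage")`, producing a WARNING event that the SIEM can correlate with the tool call events that triggered it.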

Vendor hosted platforms often limit telemetry and route incident response through vendor dashboards and support workflows, which can slow containment. For regulated and high risk environments, incident response readiness is a key reason enterprises choose self hosted AI chatbot deployment.

Self hosted AI chatbot vs SaaS chatbot: when each model is appropriate

A SaaS chatbot can be suitable for low risk public use cases such as website FAQs using only public content and no transcript retention. Risk increases significantly when the chatbot handles sensitive data or connects to internal systems.

A self hosted AI chatbot is typically preferred for:

- Customer support involving sensitive customer data

- BFSI and banking

- Healthcare administration

- Government services

- Internal enterprise copilots for HR, finance, legal, and operations

- Integrations with CRM, ERP, ticketing, or identity systems

- Deployments requiring strict data residency, auditability, and access controls

In these cases, self hosting is generally the safer and more defensible architecture.
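The criteria in this section can be condensed into a rough triage rule. This is a hypothetical sketch, not a substitute for a risk assessment: `UseCase` and `recommend_hosting` are illustrative names, and the rule recommends SaaS only when every low risk condition described above holds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    public_content_only: bool
    retains_transcripts: bool
    handles_sensitive_data: bool
    integrates_internal_systems: bool  # CRM, ERP, ticketing, identity
    strict_residency_or_audit: bool


def recommend_hosting(uc):
    """Return "saas" only for public, stateless, unintegrated use cases."""
    low_risk = (
        uc.public_content_only
        and not uc.retains_transcripts
        and not uc.handles_sensitive_data
        and not uc.integrates_internal_systems
        and not uc.strict_residency_or_audit
    )
    return "saas" if low_risk else "self_hosted"
```

A public website FAQ bot with no transcript retention passes every check; the same bot connected to a ticketing system does not.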

Conclusion: why self hosted AI chatbots are the safer choice for businesses

Self hosted AI chatbots strengthen security and governance by keeping sensitive data within the organization’s controlled environment and enabling direct enforcement of privacy and compliance controls. Key advantages include reduced external exposure, enforced data residency, stronger SSO and RBAC based access control, permission aware retrieval for secure RAG, disciplined transcript and prompt retention, improved incident response visibility, and stronger defenses against prompt injection and tool misuse.

For sensitive workflows, these controls are core requirements for safe, compliant, and reliable AI chatbot deployment.