Introduction

Large Language Models (LLMs) are at the core of modern AI applications, driving advancements in automation, decision-making, and interactive AI agents. With the rise of DeepSeek R1, discussions around hallucination rates, inference speed, compliance, and scalability have intensified, making it essential to compare the top contenders. In this guide, we analyze how DeepSeek R1 outperforms others and what factors you should consider when selecting an LLM for agentic AI applications.

Why DeepSeek R1 is Trending Worldwide

DeepSeek R1 has drawn rapid attention for its reported hallucination rate of just 0.9%, very fast inference, and strong compliance with local regulatory standards. Developed in China, it is challenging Western AI dominance and has become a popular choice for enterprises that prioritize accuracy, efficiency, and security.

Key Considerations When Selecting an LLM for Agentic AI

When choosing an LLM for agentic AI applications, several factors must be evaluated:

1. Hallucination Rate

The accuracy of responses is crucial, especially in industries like healthcare, finance, and legal services. The hallucination rate is the share of responses that contain fabricated or unsupported claims, so a lower rate means more reliable AI interactions.
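Hallucination rate is usually reported as the fraction of model outputs that an evaluator (a human reviewer or an automated judge) flags as unsupported. A minimal sketch of that arithmetic, assuming each output has already been labeled; the `JudgedResponse` type and its fields are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class JudgedResponse:
    """One model output plus an evaluator's verdict (hypothetical schema)."""
    prompt_id: str
    hallucinated: bool  # True if the evaluator found unsupported claims


def hallucination_rate(responses: list[JudgedResponse]) -> float:
    """Return the fraction of responses flagged as hallucinated (0.0 to 1.0)."""
    if not responses:
        raise ValueError("need at least one judged response")
    flagged = sum(1 for r in responses if r.hallucinated)
    return flagged / len(responses)


# Example: 9 flagged outputs out of 1,000 corresponds to a 0.9% rate.
sample = [JudgedResponse(f"p{i}", i < 9) for i in range(1000)]
print(f"{hallucination_rate(sample):.1%}")  # prints "0.9%"
```

Rates like this are only comparable across models when every model answers the same prompts and is judged by the same evaluator.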

2. Inference Speed

Faster models provide seamless user experiences, making them ideal for real-time applications such as virtual assistants and customer support bots.
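Latency is easiest to compare when every model is timed the same way. A minimal sketch that times repeated calls and reports summary statistics; `fake_generate` is a hypothetical placeholder for whichever client function actually calls the model:

```python
import statistics
import time
from typing import Callable


def measure_latency(generate: Callable[[str], str], prompt: str, runs: int = 5) -> dict:
    """Time repeated calls to `generate` and summarize wall-clock latency in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        generate(prompt)  # the model call under test; its output is discarded here
        timings.append(time.perf_counter() - start)
    return {
        "mean_s": statistics.mean(timings),
        "median_s": statistics.median(timings),
        "max_s": max(timings),
    }


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model client (hypothetical); sleeps to simulate work."""
    time.sleep(0.01)
    return "ok"


print(measure_latency(fake_generate, "Hello", runs=3))
```

For streaming chat interfaces, time to first token is often what users notice most, so it may be worth recording separately from total response time.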

3. Security & Compliance

Meeting requirements such as GDPR, HIPAA, and ISO 27001 is essential for businesses operating in regulated industries.

4. Scalability

An LLM should handle growing workloads (more users and more concurrent requests) without sharp drops in speed or reliability, ensuring long-term operational sustainability.
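One way to check whether throughput keeps up as workload grows is a small load test that raises the number of concurrent requests. A sketch using Python's `asyncio`, where `fake_model_call` is a hypothetical stand-in for a real asynchronous client:

```python
import asyncio
import time


async def fake_model_call(prompt: str) -> str:
    """Hypothetical async model client; replace with a real SDK call."""
    await asyncio.sleep(0.05)  # simulated network + inference time
    return f"answer to {prompt}"


async def load_test(concurrency: int, total_requests: int) -> float:
    """Send `total_requests` calls with at most `concurrency` in flight; return requests/second."""
    semaphore = asyncio.Semaphore(concurrency)

    async def one_request(i: int) -> str:
        async with semaphore:  # cap the number of simultaneous requests
            return await fake_model_call(f"prompt {i}")

    start = time.perf_counter()
    await asyncio.gather(*(one_request(i) for i in range(total_requests)))
    elapsed = time.perf_counter() - start
    return total_requests / elapsed


for level in (1, 4, 16):
    rps = asyncio.run(load_test(concurrency=level, total_requests=32))
    print(f"concurrency={level:>2}: {rps:.1f} req/s")
```

With a real provider, throughput typically stops growing once rate limits or server capacity are reached; the concurrency level where the curve flattens is the useful number for capacity planning.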

5. Cost-Efficiency

Organizations must balance performance and cost when deploying AI models at scale.
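The five criteria can be combined into a single comparison: treat compliance as a hard requirement, then weight the remaining criteria by importance. A minimal sketch; the model names, 0-10 scores, and weights are hypothetical placeholders, not measurements of any real model:

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """Scores for one model on a 0-10 scale (higher is better); values are illustrative."""
    name: str
    accuracy: float  # e.g. derived from an inverted hallucination rate
    speed: float
    scalability: float
    cost_efficiency: float
    certifications: set[str] = field(default_factory=set)


# Hypothetical weights; tune them to your use case. They sum to 1.0.
WEIGHTS = {"accuracy": 0.4, "speed": 0.2, "scalability": 0.2, "cost_efficiency": 0.2}


def rank(candidates: list[Candidate], required: set[str]) -> list[tuple[str, float]]:
    """Drop models missing a required standard, then sort the rest by weighted score."""
    eligible = [c for c in candidates if required <= c.certifications]
    scored = [
        (c.name, sum(getattr(c, criterion) * w for criterion, w in WEIGHTS.items()))
        for c in eligible
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


models = [
    Candidate("model-a", 9, 7, 8, 6, {"GDPR", "HIPAA"}),
    Candidate("model-b", 7, 9, 7, 8, {"GDPR"}),
    Candidate("model-c", 8, 8, 9, 7, {"GDPR", "HIPAA", "ISO 27001"}),
]
print(rank(models, required={"GDPR", "HIPAA"}))
```

Treating compliance as a filter rather than as one more weighted score reflects the fact that, in a regulated industry, a missing standard cannot be offset by extra speed or lower cost.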

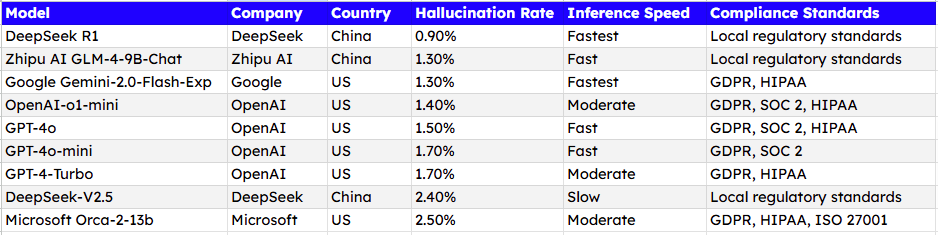

Top LLMs Ranked by Hallucination Rates and Performance

Best LLMs for Security and Compliance

For businesses prioritizing security, these models stand out:

- DeepSeek R1: Strong compliance with local regulatory standards and advanced security protocols.

- Google Gemini-2.0-Flash-Exp: Complies with GDPR and HIPAA, supporting data protection.

- Microsoft Orca-2-13b: Aligns with GDPR, HIPAA, and ISO 27001.

Best LLMs for Speed and Performance

Speed is crucial for real-time AI applications. The top performers are:

- DeepSeek R1: Fastest response times, ideal for AI-driven automation.

- Google Gemini-2.0-Flash-Exp: Fastest among Western-developed models.

- GPT-4o: Provides fast inference speeds suitable for customer interactions.

Best LLMs for Accuracy and Low Hallucination

For accuracy-focused applications, these models excel:

- DeepSeek R1: Lowest hallucination rate at 0.9%.

- Zhipu AI GLM-4-9B-Chat: Among the most accurate China-based LLMs.

- Google Gemini-2.0-Flash-Exp: Balances low hallucination with fast response times.

Balancing Cost and Scalability

Organizations looking for cost-effective solutions should consider:

- Intel Neural-Chat-7B-v3-3: Affordable with strong compliance features.

- GPT-4-Turbo: Provides a balance between performance and cost.
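Comparing cost usually comes down to token counts multiplied by per-token prices. A minimal sketch of a monthly estimate; the workload figures and the per-million-token prices are hypothetical, so substitute your provider's published rates:

```python
def monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend in dollars from per-request token counts and per-million-token prices."""
    per_request = (
        input_tokens * input_price_per_m + output_tokens * output_price_per_m
    ) / 1_000_000
    return per_request * requests_per_day * days


# Hypothetical workload and prices, for illustration only.
estimate = monthly_cost(
    requests_per_day=10_000,
    input_tokens=500,
    output_tokens=300,
    input_price_per_m=1.00,
    output_price_per_m=4.00,
)
print(f"${estimate:,.2f} per month")  # prints "$510.00 per month"
```

Output tokens are often priced higher than input tokens, so response length can dominate the bill; measuring real token counts from a pilot run gives a far better estimate than guessing.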

DeepSeek R1: The Future of Agentic AI

DeepSeek R1 is reshaping the AI landscape with its innovative capabilities:

- Reasoning-first design: Works through an explicit chain of reasoning before giving its final answer, which suits multi-step agentic tasks.

- High reported reasoning accuracy (92%): Supporting trustworthy AI interactions.

- Reinforcement-learning training: Its reasoning ability was developed largely through large-scale reinforcement learning.

- Industry dominance: Particularly excelling in healthcare, education, and research.

While OpenAI and Google still hold a competitive edge in cloud integrations, DeepSeek R1's reasoning performance and reinforcement-learning-based training are setting new benchmarks for agentic AI.

Conclusion

Selecting the right LLM for agentic AI requires evaluating accuracy, compliance, speed, and cost. At the time of writing, DeepSeek R1 is leading the pack with:

- Lowest hallucination rate (0.9%)

- Fastest inference speed

- Strong compliance with local regulations

Meanwhile, Google and OpenAI continue to offer balanced performance, making them reliable choices for Western enterprises.

Optimize Your AI Strategy

Need help choosing the best LLM for your business? Contact us today to explore AI solutions tailored to your needs.

FAQs

What are AI hallucinations?

AI hallucinations occur when a model generates false or unsupported information, often due to limited training data or algorithmic biases.

Which LLMs have the lowest hallucination rates?

At the time of writing, DeepSeek R1 (0.9%) and Google Gemini-2.0-Flash-Exp (1.3%) have the lowest reported hallucination rates.

Which compliance standards should an LLM deployment meet?

Essential standards include GDPR, HIPAA, SOC 2, and ISO 27001.

How do I choose the right LLM for my business?

Evaluate hallucination rates, inference speed, compliance, scalability, and budget to find the best fit.

Is the fastest LLM always the best choice?

Not necessarily. Speed is important, but it should not come at the cost of accuracy and security.