What is a Domain-Specific AI Assistant?

Think of a custom AI assistant as your company’s super-smart teammate, but one trained specifically on your industry’s language, data, and challenges. Whether you’re in healthcare, finance, legal, or logistics, these assistants don’t just pull information from the internet; they’re tuned to your documents, your processes, and your context.

That’s what makes them so powerful. Unlike generic chatbots, they give precise, relevant answers grounded in your own sources. If you’re serious about building AI that actually works for your business, going domain-specific isn’t just smart; it’s essential.

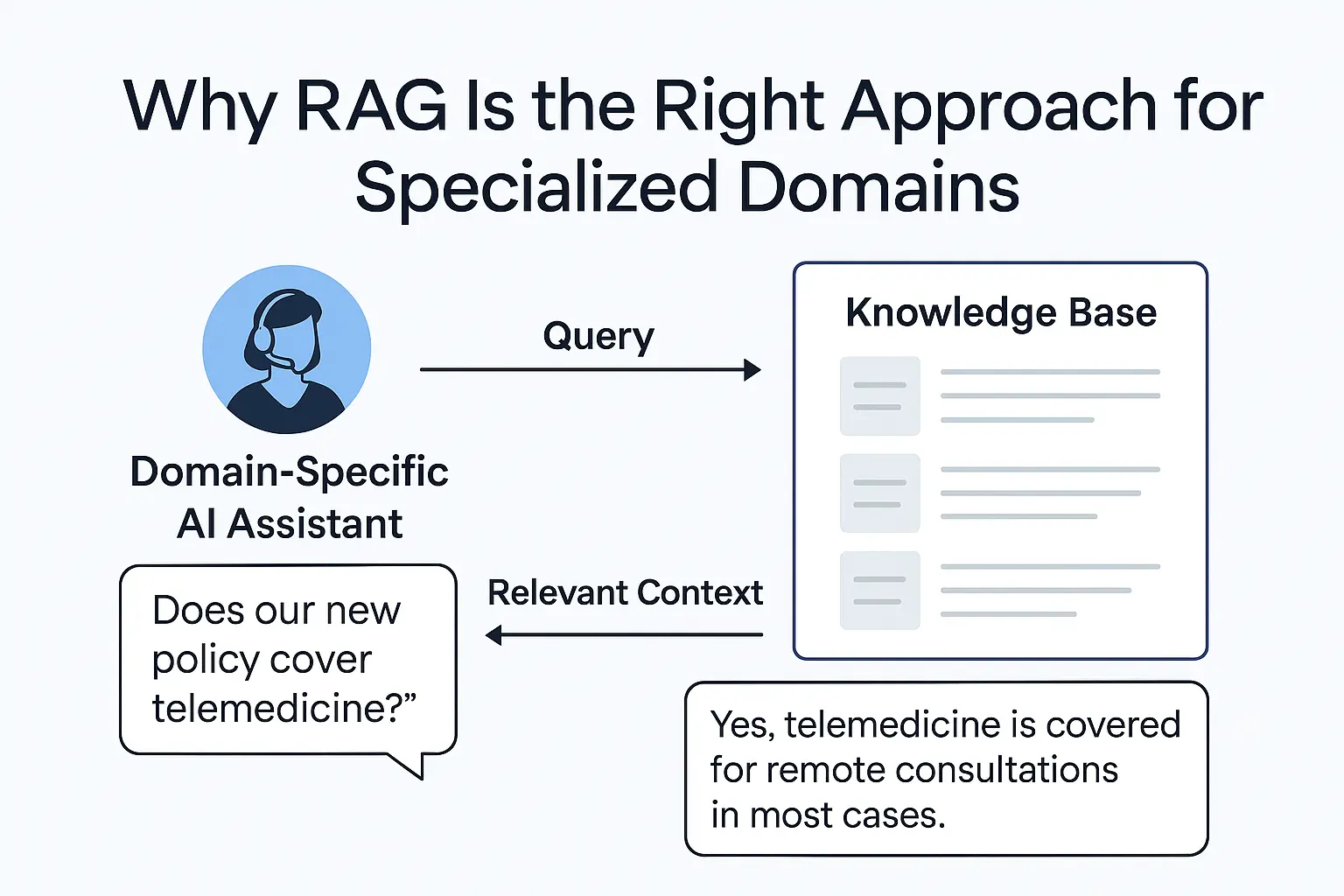

Why Is RAG the Right Approach for Specialized Domains?

Let’s be real: if your AI assistant is making up answers in a regulated or high-stakes industry, it’s doing more harm than good. That’s why RAG (Retrieval-Augmented Generation) is such a smart move. It connects a powerful language model to your actual business data, such as SOPs, compliance docs, and internal FAQs.

So every answer is backed by something real. That means far fewer hallucinations and more accurate, brand-aligned responses. If you’re building an AI assistant for healthcare, finance, legal, or enterprise ops, RAG isn’t optional; it’s the foundation.

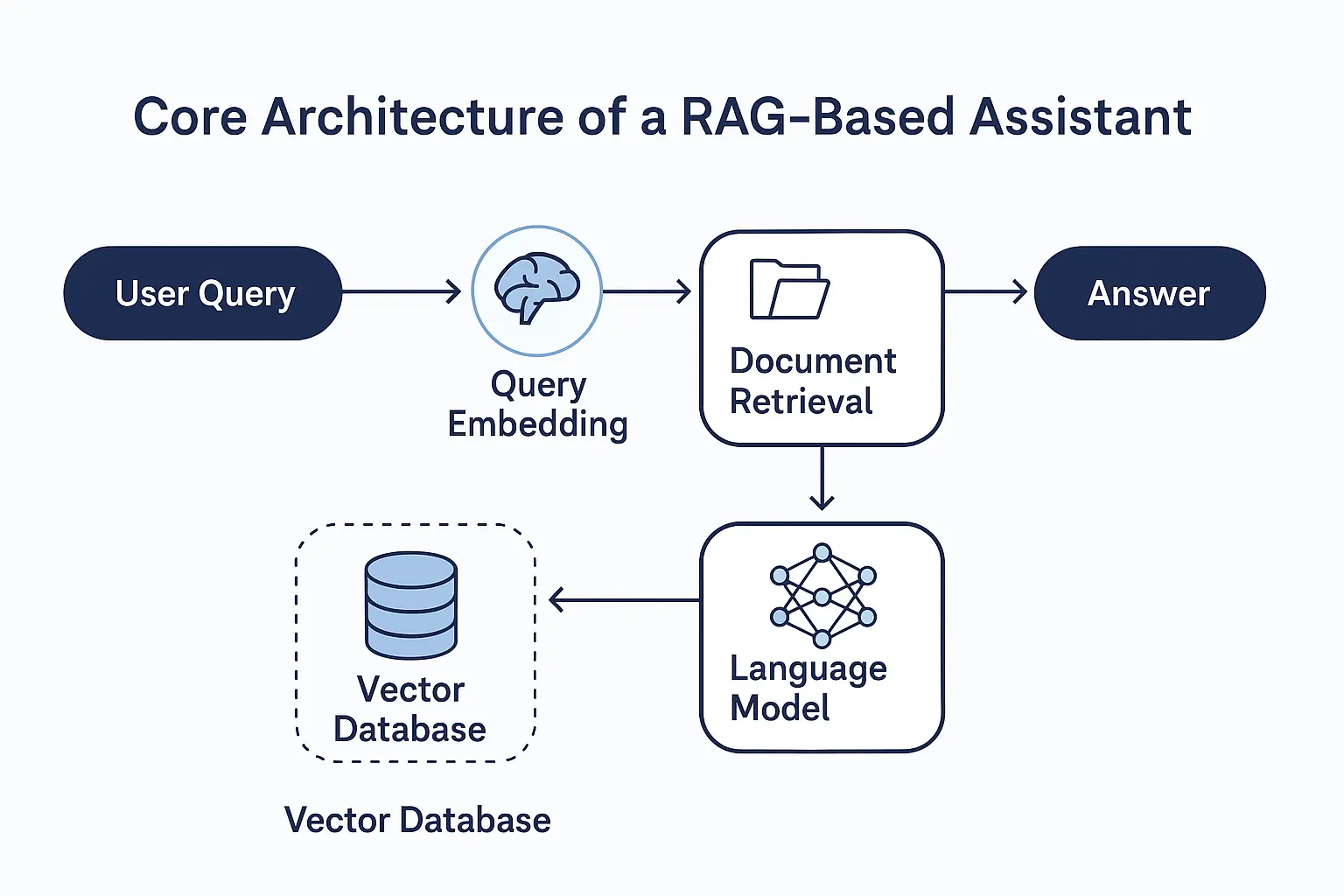

The Core Architecture of Our RAG-Based Assistants

So, how does a RAG-based AI assistant actually work behind the scenes? It starts with a user query that is embedded (converted into a vector) and compared against a vector store (like ChromaDB or FAISS) holding embeddings of your company’s documents. The most relevant passages are retrieved and passed to the language model (LLM) along with the query.

That combination helps the model generate accurate, context-aware answers rather than guesses. This architecture lets us build assistants that don’t just sound smart but actually are, because they pull insights directly from your trusted data sources every time they respond.
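
To make the flow concrete, here is a minimal Python sketch of the retrieve-then-generate loop. It is an illustration under simplifying assumptions, not our production code: `embed` is a hypothetical toy stand-in for a real embedding model (it just counts words), a plain Python list plays the role of a vector store such as ChromaDB or FAISS, and the LLM call itself is left out, so the sketch stops at the grounded prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-frequency vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (in-memory 'vector store')."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved passages and the user query into one grounded prompt for the LLM."""
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer using only the sources below. If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Refunds are processed within 14 days of receiving the returned item.",
        "Our office is closed on public holidays.",
        "Warranty claims require the original proof of purchase.",
    ]
    query = "How long do refunds take?"
    print(build_prompt(query, retrieve(query, docs, k=1)))
```

In a real deployment, document embeddings would be computed once at ingestion time and stored in the vector index, rather than recomputed for every query as this toy version does.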

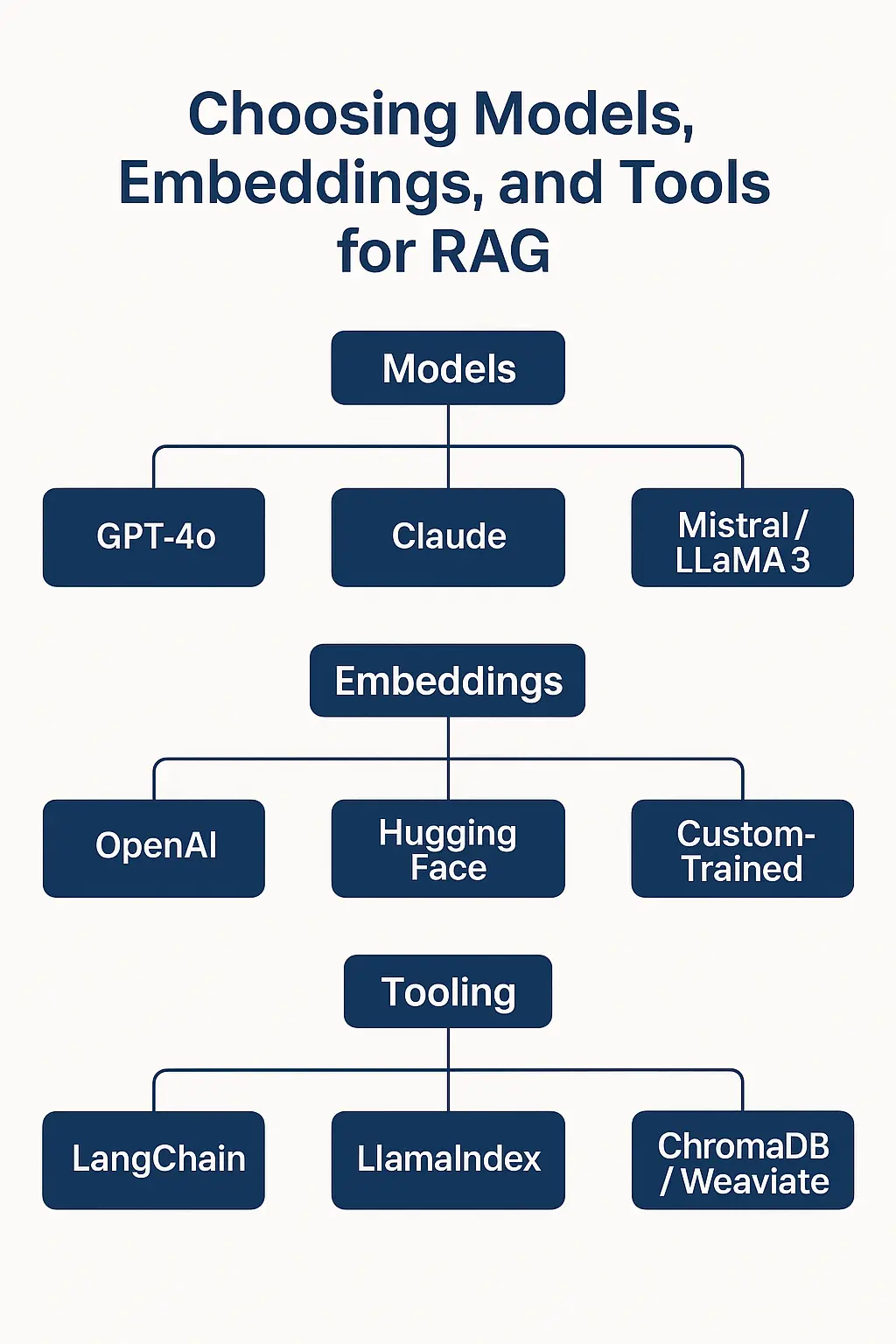

Choosing the Right Models, Embeddings, and Tools

When it comes to building a high-performing RAG assistant, your choice of tools can make or break the experience. We carefully select the right LLMs (like GPT-4o, Claude, or Mistral) based on the domain, response quality, and budget.

For embeddings, we rely on models from OpenAI or Hugging Face, or on custom-trained ones that better capture niche vocabularies. On the tooling side, frameworks like LangChain and LlamaIndex, along with vector stores like ChromaDB or Weaviate, help us stitch everything together efficiently.

Each choice impacts accuracy, latency, and cost, so we balance flexibility with production-readiness every step of the way.

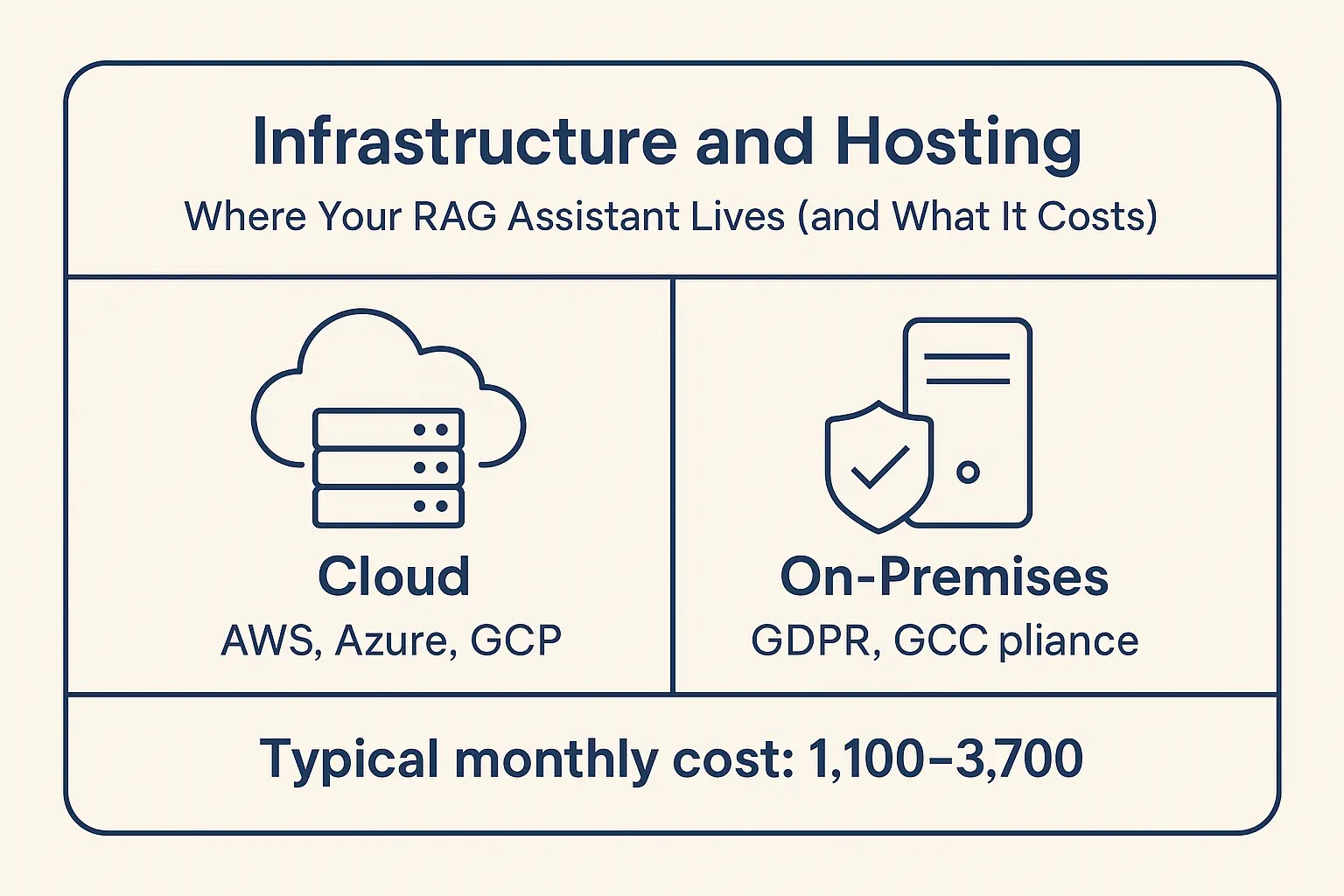

Infrastructure and Hosting: Where Your RAG Assistant Lives (and What It Costs)

Once your AI assistant is up and running, where you host it can seriously affect cost, speed, and compliance. For most businesses, cloud platforms like AWS, GCP, or Azure offer flexible, scalable hosting, especially when your assistant needs to handle spikes in traffic or multilingual queries in real time.

But if you’re in a region with strict data laws (like Saudi Arabia, the UAE, or the EU), on-premises or region-specific hosting may be non-negotiable.

Hosting a RAG stack can mean provisioning GPU-enabled servers (when you self-host models), securing persistent storage for the vector index, and sometimes setting up Kubernetes clusters or serverless APIs. It’s not just about uptime; it’s about performance, compliance, and long-term scalability.

Maintenance & Support: Keeping Your AI Assistant Sharp Over Time

Building a custom RAG-based AI assistant is just the start; keeping it accurate, relevant, and reliable is an ongoing process. As your business evolves, new documents, terms, and edge cases will pop up, and your assistant needs to keep up. That’s where re-indexing new content, periodic fine-tuning, retrieval evaluation, and feedback loops come in. You’ll also want to monitor for hallucinations, especially in low-resource languages, where models are more prone to drift.

For sensitive industries, a human-in-the-loop (HITL) setup ensures the AI escalates anything unclear or risky to a person for review. Think of maintenance not as a cost but as a safeguard, one that keeps your assistant trustworthy and useful at scale.
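
One simple way to implement that escalation is a routing rule applied before an answer reaches the user. The sketch below is hypothetical: the threshold `MIN_RETRIEVAL_SCORE`, the `RISKY_TERMS` list, and the `Draft` structure are illustrative assumptions, and real values would be tuned on your own evaluation data and compliance requirements.

```python
from dataclasses import dataclass

# Hypothetical policy values for illustration only; tune real ones on your own data.
MIN_RETRIEVAL_SCORE = 0.35
RISKY_TERMS = ("dosage", "diagnosis", "lawsuit", "termination")


@dataclass
class Draft:
    query: str
    answer: str
    top_score: float  # similarity score of the best retrieved passage (0.0 to 1.0)


def route(draft: Draft) -> str:
    """Return "auto" to send the answer directly, or "human" to escalate it for review."""
    if draft.top_score < MIN_RETRIEVAL_SCORE:
        return "human"  # weak grounding: the sources may not cover the question
    text = f"{draft.query} {draft.answer}".lower()
    if any(term in text for term in RISKY_TERMS):
        return "human"  # sensitive topic: a person signs off before the user sees it
    return "auto"
```

Keyword matching is deliberately crude here; a production setup might use a trained classifier instead, but the routing pattern stays the same.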

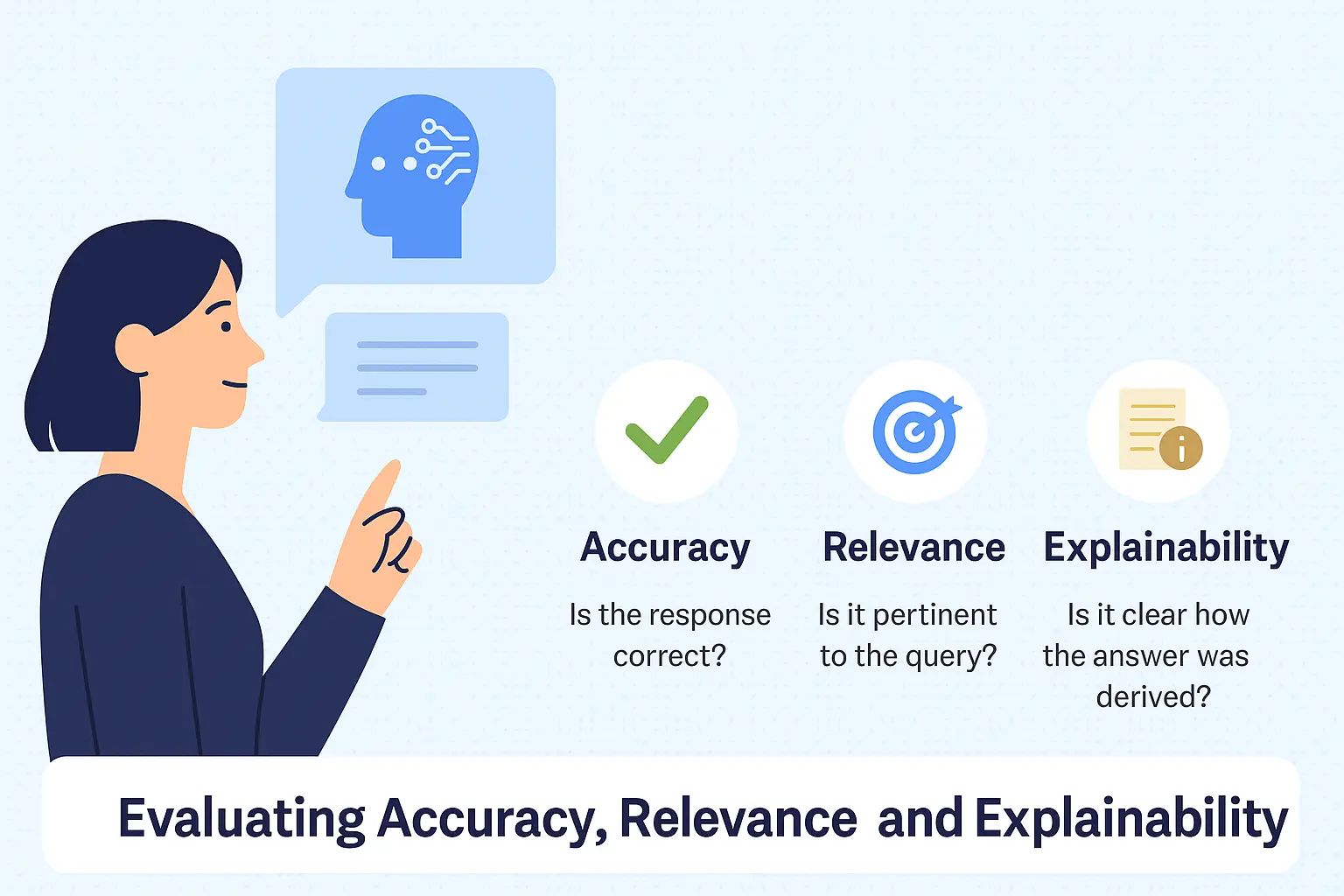

Evaluating Accuracy, Relevance, and Explainability

It’s not enough for your AI assistant to sound smart; it needs to be right. Evaluating a RAG system means measuring how accurate, relevant, and explainable its answers are. We use metrics like precision@k (the share of the top k retrieved passages that are actually relevant), retrieval overlap, and human-graded QA scores to check whether the assistant is pulling the right context and delivering useful responses.
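
Precision@k is simple to compute once you have a labelled set of test queries with known relevant documents: count the relevant documents among the top k results and divide by k. A minimal sketch, assuming retrieval results are represented as lists of document IDs (the function names are our own, not from a particular library):

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k retrieved document IDs that are in the relevant set."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k  # common convention: divide by k even if fewer than k results came back


def mean_precision_at_k(eval_set: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average precision@k over labelled (retrieved, relevant) pairs, one per test query."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    return sum(precision_at_k(r, rel, k) for r, rel in eval_set) / len(eval_set)
```

For example, if the top three results are `doc3`, `doc7`, and `doc1`, and only `doc1` and `doc3` are relevant, precision@3 is 2/3.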

For regulated industries, explainability becomes critical: your AI needs to show why it gave an answer and where the information came from. We build feedback loops and dashboards so you can track performance and continuously improve. In short: if you can’t measure it, you can’t trust it!
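
A common way to support this is to have the model cite numbered sources in its answer and return those sources alongside the text. The sketch below assumes the prompt asks the model to use `[n]` markers that refer to the retrieved passages in order; the `Source` and `CitedAnswer` classes are hypothetical, and show how an answer can be checked for citations that point nowhere before it reaches a user.

```python
import re
from dataclasses import dataclass, field

CITATION = re.compile(r"\[(\d+)\]")  # matches markers such as [1] or [12]


@dataclass
class Source:
    doc_id: str
    title: str


@dataclass
class CitedAnswer:
    text: str  # model output containing citation markers
    sources: list[Source] = field(default_factory=list)  # retrieved passages, in prompt order

    def cited_ids(self) -> set[int]:
        """Citation numbers that appear in the answer text."""
        return {int(n) for n in CITATION.findall(self.text)}

    def has_dangling_citations(self) -> bool:
        """True if the answer cites a number with no matching retrieved source."""
        return any(n < 1 or n > len(self.sources) for n in self.cited_ids())

    def render(self) -> str:
        """Answer text followed by only the sources it actually cites."""
        cited = self.cited_ids()
        lines = [self.text, "", "Sources:"]
        lines += [
            f"[{i}] {s.title} ({s.doc_id})"
            for i, s in enumerate(self.sources, start=1)
            if i in cited
        ]
        return "\n".join(lines)
```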

Real-World Use Cases: How We Deploy RAG Assistants in the Wild

RAG-powered AI assistants really shine when tailored to real business workflows, and we’ve deployed them across industries. In healthcare, assistants can retrieve medical protocols, summarize patient histories, and support triage conversations, all within a HIPAA-compliant setup. In legal, they pull case law, draft clauses, and flag risky terms using internal precedents. In e-commerce, they power multilingual product search, handle customer queries, and support live order tracking.

What ties it all together? The assistant isn’t “guessing”; it’s retrieving verified information from your content and responding in your brand’s tone. That’s where the magic (and ROI) happens.

Security, Privacy, and Access Control: Protecting Your Data with RAG

When you’re building an AI assistant that taps into sensitive business data, security can’t be an afterthought. With RAG systems, we implement strict access controls, ensuring the assistant only retrieves and responds with documents the user is authorized to see. That means integrating role-based permissions, API key management, and even document-level encryption when needed.
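
The key design choice is to filter by permission before ranking, so documents a user may not see never reach the prompt at all. A minimal sketch, assuming each document carries a hypothetical `allowed_roles` metadata field; `overlap_score` is a toy stand-in for vector similarity. Many vector stores offer metadata filtering that can play the same role, but the exact API differs by product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # hypothetical per-document access-control metadata


def overlap_score(query: str, text: str) -> float:
    """Toy relevance score (count of shared lowercase words), standing in for vector similarity."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve_for_user(
    query: str,
    docs: list[Doc],
    user_roles: set[str],
    k: int = 3,
    score: Callable[[str, str], float] = overlap_score,
) -> list[Doc]:
    """Filter to documents the user may see, then rank only those."""
    permitted = [d for d in docs if d.allowed_roles & user_roles]  # at least one shared role
    return sorted(permitted, key=lambda d: score(query, d.text), reverse=True)[:k]
```

Filtering after ranking would also work for display, but the restricted text could still end up in the LLM prompt; filtering first avoids that.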

For industries operating under GDPR, HIPAA, or GCC data-protection regulations, we support region-specific hosting, audit trails, and enterprise-grade compliance workflows. Bottom line: your assistant should be as secure and private as the data it was built on, and we make sure it is.

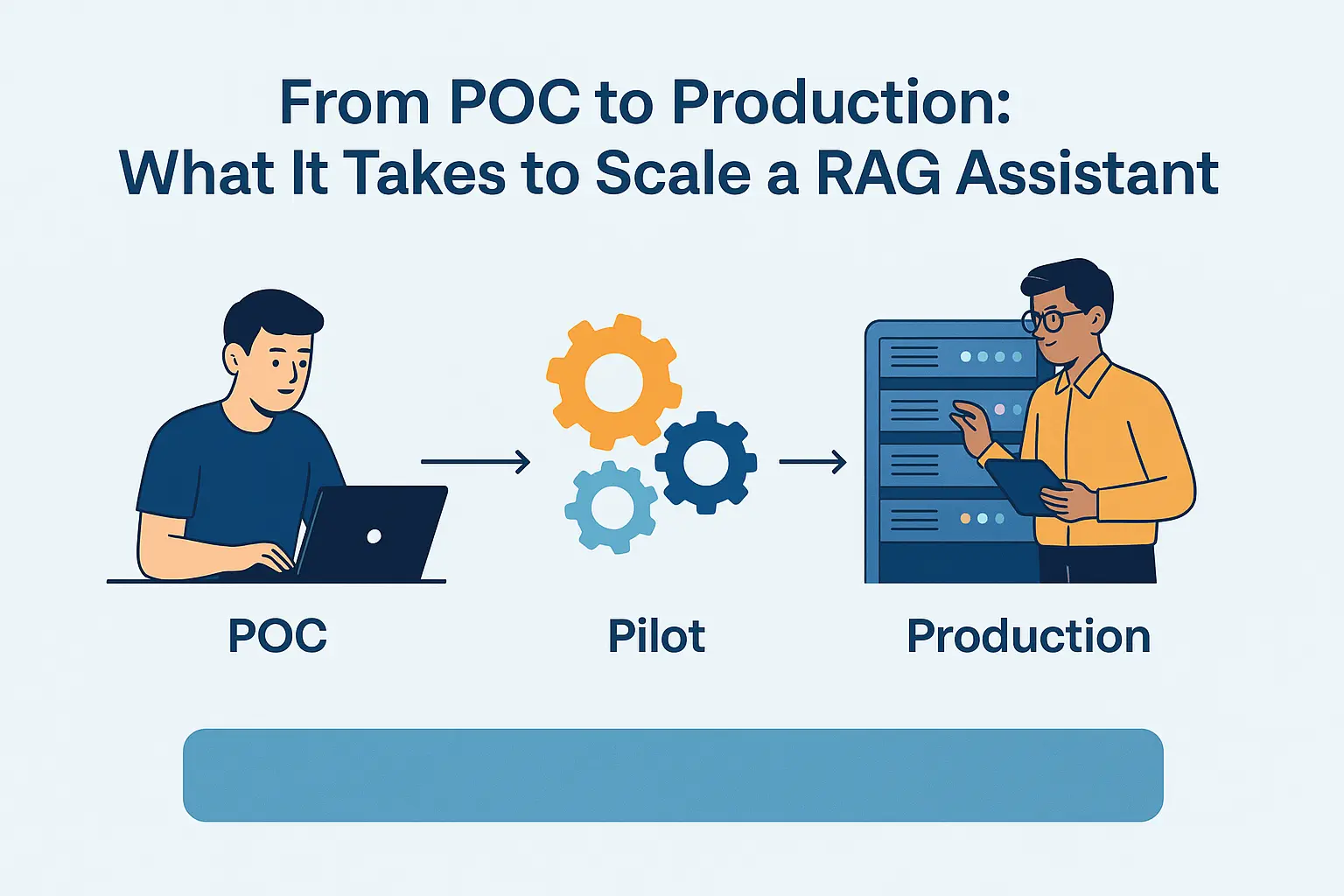

From POC to Production: What It Takes to Scale a RAG Assistant

Launching a prototype is easy, but scaling a domain-specific AI assistant into production? That’s where most teams hit a wall.

We guide our partners through every phase: from building a lean proof of concept (POC) to handling infrastructure, versioning, and monitoring at scale. That includes setting up automated retraining loops, feedback collection, token cost optimization, and integrating the assistant into real workflows (like CRMs, helpdesks, or intranets).
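
Token cost optimization often starts with the context window: sending fewer, better passages per request. A minimal sketch of greedy context packing, assuming chunks arrive already ranked by relevance; `approx_tokens` is a rough word-count stand-in, and a real system would count tokens with the model’s own tokenizer.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate (whitespace-separated words); swap in the model's tokenizer in practice."""
    return len(text.split())


def pack_context(ranked_chunks: list[str], budget: int) -> list[str]:
    """Greedily keep the highest-ranked chunks that fit within the token budget."""
    packed: list[str] = []
    used = 0
    for chunk in ranked_chunks:
        cost = approx_tokens(chunk)
        if used + cost <= budget:
            packed.append(chunk)
            used += cost
        # A chunk that doesn't fit is skipped, not a stopping point,
        # so smaller lower-ranked chunks can still use the remaining budget.
    return packed
```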

We also track model drift, manage uptime, and align the assistant with business KPIs. The goal isn’t just to launch an AI tool; it’s to run one that continuously delivers value.